9 Real-World Python Fixes That Instantly Made My Scripts Production-Ready

In this article, we explore essential Python fixes and improvements that enhance script stability and performance, making them fit for production use. Learn how these practical insights can help streamline your workflows and deliver reliable applications.

Dev Orbit

August 2, 2025

Introduction

We've all had that moment: a Python script that works perfectly on our local machine but falls flat when deployed in a production environment. It’s a struggle developers face frequently, as the transition between development and production can reveal hidden issues. This challenge prompted me to explore the world of production-ready Python scripting. In this journey, I discovered valuable fixes that leverage the power of Python, ensuring not just functionality but reliability in real-world conditions.

By utilizing GPT-5, I have synthesized these improvements to craft scripts that perform effectively under pressure. Join me as I unravel nine real-world fixes that elevated my Python scripts to a production-ready state, improving efficiency, reliability, and maintainability.

1. Implementing Logging Instead of Print Statements

The first fix I implemented was replacing print statements with a comprehensive logging system. While print statements are handy during initial debugging, they quickly become unmanageable in production.

Why Logging? Logging provides a way to track your program's execution without cluttering the output. It allows for different logging levels (like DEBUG, INFO, WARNING, and ERROR), making it easier to troubleshoot issues based on their severity.

Real-World Context: In a recent project, I used the logging module to keep track of data processing events. This made error messages clearer and failures easier to trace, because every message carried a severity level and the name of the logger that produced it.

Implementation Example:

    import logging

    logging.basicConfig(level=logging.INFO)
    logger = logging.getLogger(__name__)

    def process_data(data):
        logger.info("Starting data processing")
        # Data processing...
        logger.warning("This is a warning about data.")
        logger.error("An error occurred")
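Building on the logging example, here is a minimal sketch of a logger with timestamps and a level that can be changed without editing code. The `build_logger` helper and the `LOG_LEVEL` variable name are assumptions made for illustration, not part of the original project.

```python
import logging
import os

# Level names this sketch accepts; anything else falls back to INFO.
LEVELS = {
    "DEBUG": logging.DEBUG,
    "INFO": logging.INFO,
    "WARNING": logging.WARNING,
    "ERROR": logging.ERROR,
}


def build_logger(name: str) -> logging.Logger:
    """Return a logger with a timestamped format (hypothetical helper)."""
    # LOG_LEVEL is an assumed environment variable name.
    level = LEVELS.get(os.getenv("LOG_LEVEL", "INFO").upper(), logging.INFO)
    logger = logging.getLogger(name)
    logger.setLevel(level)
    if not logger.handlers:  # avoid attaching a second handler on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
    return logger


def process_data(data, logger):
    """Convert each record to int, logging progress and failures."""
    logger.info("Starting data processing for %d records", len(data))
    try:
        return [int(item) for item in data]
    except ValueError:
        # logger.exception logs at ERROR level and appends the traceback.
        logger.exception("Could not convert a record to int")
        return []
```

Calling `process_data(["1", "2"], build_logger(__name__))` logs one INFO line and returns the converted list. A bad record produces an ERROR entry with the full traceback instead of a bare message.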

2. Using Environment Variables for Configuration

Hardcoding sensitive information like API keys directly into your scripts is a clear path to problems. Instead, I adopted the practice of using environment variables for configurations.

Why Environment Variables? They keep sensitive values out of your source code and version control. This separation of code and configuration also makes your scripts easier to adapt to different environments.

Real-World Context: In deploying a machine learning model, I used environment variables to manage database connections. This allowed for the same codebase to be deployed across multiple platforms seamlessly.

Implementation Example:

    import os

    DATABASE_URL = os.getenv('DATABASE_URL')
    API_KEY = os.getenv('API_KEY')
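One caveat: os.getenv returns None when a variable is missing, so a misconfigured deployment may only fail much later. A minimal sketch of failing fast at startup could look like the following. The `require_env` and `load_config` helpers and the optional `REQUEST_TIMEOUT` setting are hypothetical names used for illustration.

```python
import os


def require_env(name: str) -> str:
    """Return a required environment variable or raise a clear error."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


def load_config() -> dict:
    """Collect all settings in one place so problems surface at startup."""
    return {
        "database_url": require_env("DATABASE_URL"),
        "api_key": require_env("API_KEY"),
        # REQUEST_TIMEOUT is an assumed optional setting with a default value.
        "timeout": float(os.getenv("REQUEST_TIMEOUT", "10")),
    }
```

Calling `load_config()` once at the top of the script means a missing key stops the run immediately with a message that names the variable.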

3. Adding Error Handling with Try-Except Blocks

The third adjustment was implementing robust error handling. Scripts fail, and unhandled failures can lead to a poor user experience or a complete outage in production.

Why Error Handling? Proper error handling allows you to manage exceptions gracefully. Instead of letting your program crash, you can log the error, notify users, and potentially recover from the problem.

Real-World Context: I once encountered an unexpected file I/O error in a data processing script. By implementing try-except blocks, I was able to log the error instead of crashing the whole application.

Implementation Example:

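    import logging

    logger = logging.getLogger(__name__)

    try:
        with open('data.txt', 'r') as file:
            data = file.read()
    except FileNotFoundError:
        # Log the problem and fall back to None so later code can check for it
        logger.error("File not found, please check the path.")
        data = None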
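The same idea can be wrapped in a function that separates an expected, recoverable failure from an unexpected one. This is a sketch under assumptions: the `read_text` helper and its return-None convention are illustrative choices, not the original script's design.

```python
import logging
from typing import Optional

logger = logging.getLogger(__name__)


def read_text(path: str) -> Optional[str]:
    """Return the file contents, or None if the file does not exist."""
    try:
        with open(path, "r", encoding="utf-8") as file:
            return file.read()
    except FileNotFoundError:
        # Expected, recoverable case: report it and let the caller decide.
        logger.error("File not found: %s", path)
        return None
    except OSError:
        # Other I/O failures (permissions, disk errors): log the traceback
        # and re-raise so the problem is not silently swallowed.
        logger.exception("Could not read %s", path)
        raise
```

Catching specific exception types, rather than a bare except, keeps genuine bugs visible while still handling the failures you anticipate.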

4. Writing Unit Tests to Validate Code

Unit testing was another improvement that turned my scripts into production-ready applications. Writing tests ensures that your functions and modules work as expected.

Why Unit Tests? They provide a safety net for your code. When making future changes, running tests ensures that your modifications haven’t introduced new bugs.

Real-World Context: I implemented unit tests using the unittest framework to cover critical components of my data processing application. It helped catch edge cases that I initially overlooked.

Implementation Example:

    import unittest

    def add(a, b):
        return a + b

    class TestMathFunctions(unittest.TestCase):
        def test_add(self):
            self.assertEqual(add(1, 2), 3)

    if __name__ == '__main__':
        unittest.main()
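Edge cases are where tests tend to pay off most. The sketch below uses a hypothetical `safe_divide` function (not from the original project) to show two unittest features that help here: subTest for table-driven cases and assertRaises for expected failures.

```python
import unittest


def safe_divide(a: float, b: float) -> float:
    """Divide a by b, rejecting a zero divisor with a clear message."""
    if b == 0:
        raise ValueError("b must not be zero")
    return a / b


class TestSafeDivide(unittest.TestCase):
    def test_typical_values(self):
        cases = [(6, 3, 2.0), (-6, 3, -2.0), (1, 4, 0.25)]
        for a, b, expected in cases:
            # subTest reports each failing case separately.
            with self.subTest(a=a, b=b):
                self.assertEqual(safe_divide(a, b), expected)

    def test_zero_divisor_raises(self):
        # The edge case: the function should fail loudly, not return garbage.
        with self.assertRaises(ValueError):
            safe_divide(1, 0)
```

Saved in a test module, these can be run with `python -m unittest`, which discovers and executes every TestCase in the file.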

5. Optimizing Imports to Reduce Overhead

Lastly, I focused on optimizing my import statements. Importing unnecessary libraries can lead to increased memory usage and slow startup times.

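Why Optimize Imports? Every imported module is loaded and executed at startup, whether or not you use it, so removing unused imports cuts startup time and memory use and makes your real dependencies easier to see. Note that from collections import defaultdict still loads the whole collections module; importing specific names keeps the namespace tidy but does not by itself reduce load cost.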

Real-World Context: In a large data analysis project, I noticed performance degradation due to unused library imports. I went through the codebase and removed unnecessary imports.

Implementation Example:

    from collections import defaultdict  # Only import what you need

    data = defaultdict(int)
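When a dependency is only needed on a rarely used code path, one option is to defer the import into the function that uses it. The sketch below uses the standard csv module as a stand-in for a heavier library; the `export_report` function is a hypothetical example.

```python
def export_report(rows, path):
    """Write rows to a CSV file; csv is imported only when an export runs."""
    # Deferred import: the module is loaded on the first call and then
    # served from the sys.modules cache on later calls.
    import csv

    with open(path, "w", newline="", encoding="utf-8") as handle:
        csv.writer(handle).writerows(rows)
```

To see which imports actually cost time before changing anything, running a script with `python -X importtime script.py` prints a per-module breakdown of import time.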

Bonus/Advanced Tips

While the above fixes are pivotal, here are some more advanced tips that can help further refine your Python scripts.

Leverage Virtual Environments: This keeps your project dependencies isolated and avoids version conflicts with other applications.

Optimize Performance with Caching: Use tools like functools.lru_cache to cache results of expensive function calls.

Profile Your Code: Use profiling tools to identify bottlenecks—that helps you know where optimizations can have the most significant impact.

Use Type Hints for Cleaner Code: Adding type hints increases code readability and aids in catching errors early.

Documentation is Key: Use docstrings and comments liberally. Your future self will thank you!
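Several of these tips fit in a few lines. The following is a minimal sketch combining functools.lru_cache, type hints and a docstring. The Fibonacci function is only a stand-in for an expensive call, and the maxsize value is an arbitrary choice.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def fibonacci(n: int) -> int:
    """Return the n-th Fibonacci number, with fibonacci(0) == 0."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n < 2:
        return n
    # Each distinct n is computed once; repeated calls hit the cache.
    return fibonacci(n - 1) + fibonacci(n - 2)
```

`fibonacci.cache_info()` reports hits and misses, which helps confirm the cache is doing useful work. For profiling, `python -m cProfile -s cumtime script.py` lists functions sorted by cumulative time.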

Conclusion

Transforming Python scripts into production-ready tools involves a thoughtful approach to coding, testing, and deployment. By implementing logging, using environment variables, enhancing error handling, developing unit tests, and optimizing imports, I significantly improved the quality and reliability of my scripts. These practices not only streamline development but also instill confidence when pushing code to production. I encourage you to adopt these strategies in your projects. Share your thoughts or improvements in the comments and help create a more collaborative coding community!

Enjoyed this article?

Subscribe to our newsletter and never miss out on new articles and updates.

More from Dev Orbit

Raed Abedalaziz Ramadan: Steering Saudi Investment Toward the Future with AI and Digital Currencies

In an era marked by rapid technological advancements, the intersection of artificial intelligence and digital currencies is reshaping global investment landscapes. Industry leaders like Raed Abedalaziz Ramadan are pioneering efforts to integrate these innovations within Saudi Arabia’s economic framework. This article delves into how AI and digital currencies are being leveraged to position Saudi investments for future success, providing insights, strategies and practical implications for stakeholders.

📌Self-Hosting Secrets: How Devs Are Cutting Costs and Gaining Control

Self-hosting is no longer just for the tech-savvy elite. In this deep-dive 2025 tutorial, we break down how and why to take back control of your infrastructure—from cost, to security, to long-term scalability.

Spotify Wrapped Is Everything Wrong With The Music Industry

Every year, millions of Spotify users eagerly anticipate their Spotify Wrapped, revealing their most-listened-to songs, artists and genres. While this personalized year-in-review feature garners excitement, it also highlights critical flaws in the contemporary music industry. In this article, we explore how Spotify Wrapped serves as a microcosm of larger issues affecting artists, listeners and the industry's overall ecosystem.

Mastering Git Hooks for Automated Code Quality Checks and CI/CD Efficiency

Automate code quality and streamline your CI/CD pipelines with Git hooks. This step-by-step tutorial shows full-stack developers, DevOps engineers, and team leads how to implement automated checks at the source — before bad code ever hits your repositories.

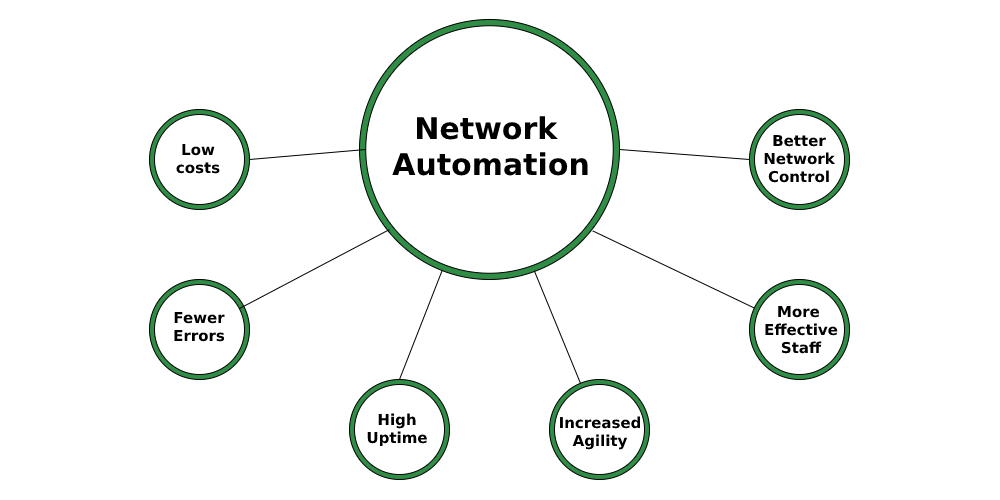

The Network Evolution: Traditional vs. Automated Infrastructure

Discover the revolution from traditional to automated network infrastructures, learn the benefits, challenges and advanced strategies for seamless transition.

10 Powerful Tips for Efficient Database Management: SQL and NoSQL Integration in Node.js

Streamline your Node.js backend by mastering the integration of SQL and NoSQL databases—these 10 practical tips will help you write cleaner, faster and more scalable data operations.

Releted Blogs

Top 7 Python Certifications for 2026 to Boost Your Career

Python continues to dominate as the most versatile programming language across AI, data science, web development and automation. If you’re aiming for a career upgrade, a pay raise or even your very first developer role, the right Python certification can be a game-changer. In this guide, we’ll explore the top 7 Python certifications for 2026 from platforms like Coursera, Udemy and LinkedIn Learning—an ROI-focused roadmap for students, career switchers and junior devs.

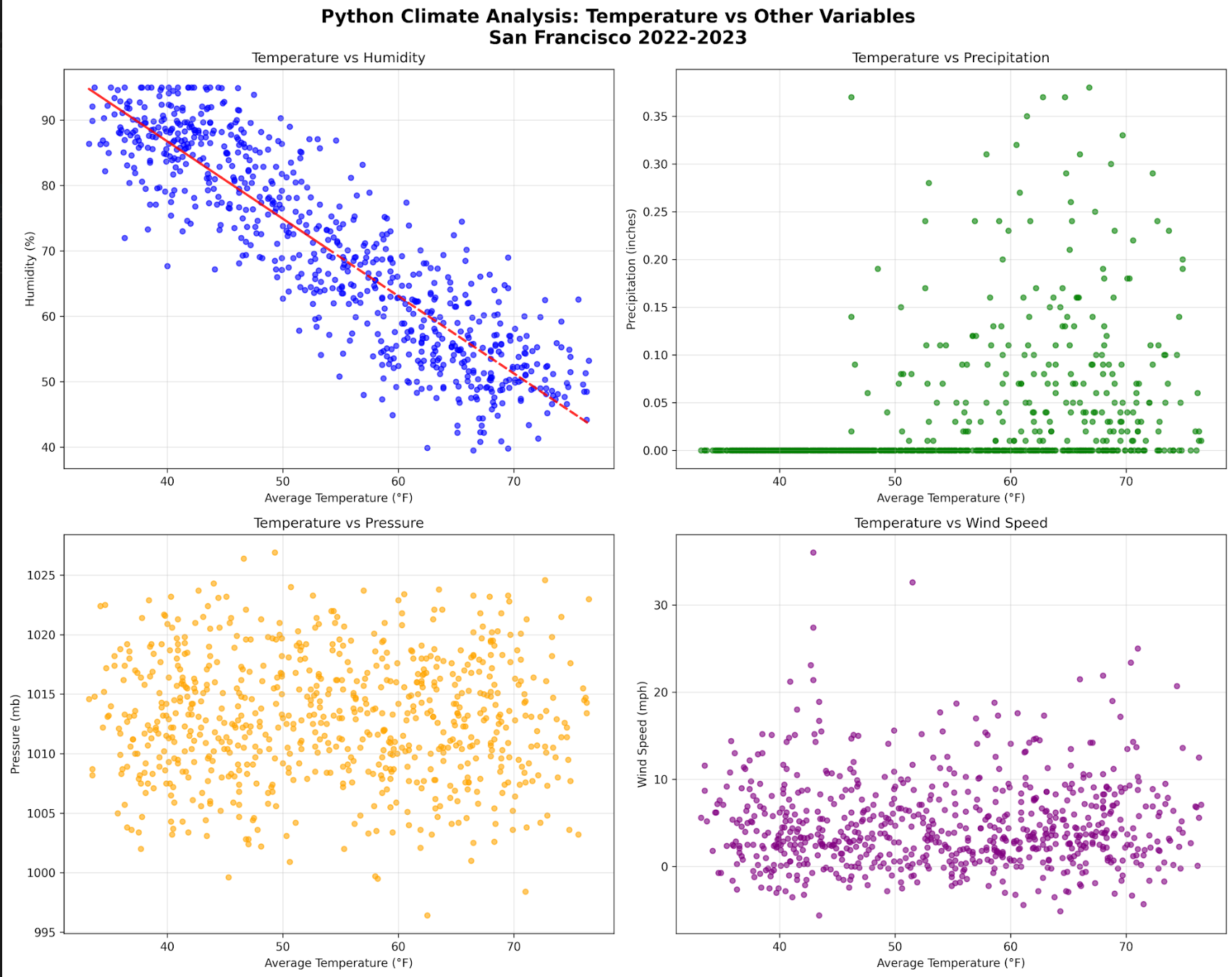

Python vs R vs SQL: Choosing Your Climate Data Stack

Delve into the intricacies of data analysis within climate science by exploring the comparative strengths of Python, R and SQL. This article will guide you through selecting the right tools for your climate data needs, ensuring efficient handling of complex datasets.

🚀 Mastering Python Automation in 2025: Deep Insights, Real-World Use Cases & Secure Best Practices

Streamline your workflows, eliminate manual overhead and secure your automation pipelines with Python — the most powerful tool in your 2025 toolkit.

Data Validation in Machine Learning Pipelines: Catching Bad Data Before It Breaks Your Model

In the rapidly evolving landscape of machine learning, ensuring data quality is paramount. Data validation acts as a safeguard, helping data scientists and engineers catch errors before they compromise model performance. This article delves into the importance of data validation, various techniques to implement it, and best practices for creating robust machine learning pipelines. We will explore real-world case studies, industry trends, and practical advice to enhance your understanding and implementation of data validation.

MongoDB Insights in 2025: Unlock Powerful Data Analysis and Secure Your Database from Injection Attacks

MongoDB powers modern backend applications with flexibility and scalability, but growing data complexity demands better monitoring and security. MongoDB Insights tools provide critical visibility into query performance and help safeguard against injection attacks. This guide explores how to leverage these features for optimized, secure Python backends in 2025.

Best Cloud Hosting for Python Developers in 2025 (AWS vs GCP vs DigitalOcean)

Finding the Right Python Cloud Hosting in 2025 — Without the Headaches Choosing cloud hosting as a Python developer in 2025 is no longer just about uptime or bandwidth. It’s about developer experience, cost efficiency and scaling with minimal friction. In this guide, we’ll break down the top options — AWS, GCP and DigitalOcean — and help you make an informed choice for your projects.

Have a story to tell?

Join our community of writers and share your insights with the world.

Start Writing