Data Validation in Machine Learning Pipelines: Catching Bad Data Before It Breaks Your Model

In the rapidly evolving landscape of machine learning, ensuring data quality is paramount. Data validation acts as a safeguard that helps data scientists and engineers catch errors before they compromise model performance. This article covers why data validation matters, techniques for implementing it, and best practices for building robust machine learning pipelines, with practical advice for putting them to work.

Dev Orbit

August 2, 2025

Introduction

As artificial intelligence and machine learning reach into more aspects of our lives, data-driven systems keep growing more complex. One of the most significant pain points is bad data entering machine learning pipelines, where it can skew results and produce misleading predictions. As models such as GPT-5 are deployed more widely, the stakes rise: poor data quality can cause serious failures in applications ranging from healthcare to finance. Effective data validation is therefore not just beneficial but essential to the reliability and accuracy of your models. This article aims to equip you to catch bad data before it breaks your machine learning model.

Understanding Data Validation

Data validation is the process of ensuring that data is both accurate and usable. This step is crucial in machine learning, where the quality of the input data directly affects the learning process and, consequently, the model's performance.

It can be broken down into several key categories:

Type Check: Ensuring values have the expected data types, such as integers, floats, or strings.

Range Check: Verifying that values fall within a specified range. For instance, age should not be negative.

Statistical Validation: Using techniques like z-scores or the interquartile range (IQR) to identify outliers for review or removal.

Format Check: Ensuring that data follows the predefined format. Email addresses should conform to the standard format of “user@domain.com”.
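The type, range and format checks above map naturally onto a few pandas operations. The following is a minimal sketch, assuming a DataFrame with hypothetical `age` and `email` columns; the 0 to 120 age range and the deliberately simple email pattern are illustrative assumptions (the pattern is not a complete email validator).

```python
import pandas as pd

# Deliberately simple pattern (assumption): something@something.tld
EMAIL_PATTERN = r"^[^@\s]+@[^@\s]+\.[^@\s]+$"


def validate_rows(df: pd.DataFrame) -> pd.DataFrame:
    """Return a boolean DataFrame marking which rows fail each check."""
    # Type check: coerce 'age' to numbers; unparseable entries become NaN.
    age = pd.to_numeric(df["age"], errors="coerce")
    type_fail = age.isna() & df["age"].notna()

    # Range check: ages must lie in [0, 120]; unparseable or missing ages are skipped here.
    range_fail = age.notna() & ~age.between(0, 120)

    # Format check: emails must match the pattern; missing emails also fail.
    email = df["email"].astype("string")
    format_fail = ~email.str.match(EMAIL_PATTERN).fillna(False)

    return pd.DataFrame(
        {"type": type_fail, "range": range_fail, "format": format_fail}
    )


df = pd.DataFrame(
    {
        "age": [34, -5, "abc", 150],
        "email": ["a@b.com", "bad-email", "c@d.org", None],
    }
)
print(validate_rows(df))
```

Calling `validate_rows(df).any(axis=1)` yields a per-row mask you could use to quarantine failing records instead of silently dropping them.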

Importance of Data Validation in ML Pipelines

The significance of data validation in machine learning pipelines cannot be overstated. Without it, your models are prone to several risks:

Model Bias: Inaccurate or unrepresentative data can bias the model's predictions. For example, if a training dataset for a facial recognition algorithm lacks diversity, the model may perform poorly on underrepresented demographics.

Overfitting: Noisy or mislabeled data can lead to models that fit the noise rather than the underlying patterns, resulting in poor generalization.

Wasted Resources: Building complex models on bad data is a waste of time and computational resources.

Moreover, organizations can incur financial losses due to mispredictions triggered by bad data. For example, in the finance sector, inaccurate credit scoring could lead to inappropriate lending decisions. These considerations highlight the need for diligent data validation protocols in machine learning workflows.

Techniques for Data Validation

Implementing data validation requires a toolbox of techniques that can be customized based on your project's needs. Here are some of the most effective methods that can be integrated into a machine learning pipeline:

1. Automated Data Quality Checks

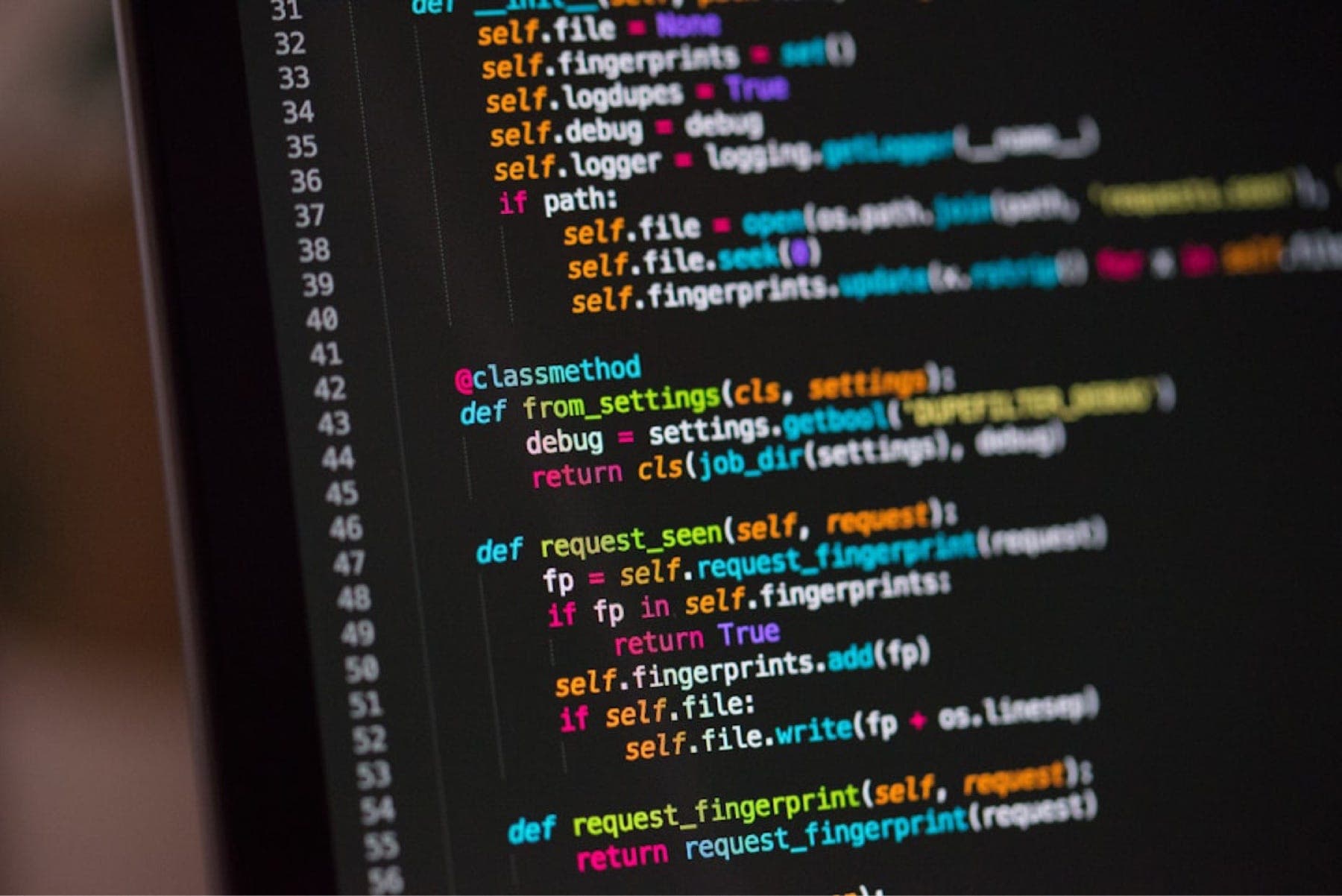

Automation is a crucial aspect of data validation. Utilizing libraries such as Pandas in Python can help you automate various data quality checks efficiently.

Example:

Description: Visual representation of automated data checks using Python's Pandas library.Below is a sample code snippet to automate basic data validations:

```python
import pandas as pd

def check_data_quality(df):
    # Checking for null values anywhere in the DataFrame
    if df.isnull().values.any():
        print("Data contains null values!")

    # Type checks: report the dtype pandas inferred for each column
    for column in df.columns:
        print(f"Data type of {column} is {df[column].dtype}")

df = pd.read_csv('data.csv')
check_data_quality(df)
```

2. Data Profiling

Data profiling provides a comprehensive overview of the dataset and can highlight anomalies. Tools like Great Expectations let you declare assertions ("expectations") about your data and validate each new batch of data against them.

For instance, you can set up expectations like:

Column 'age' must have values between 0 and 120.

Column 'email' must match a regex pattern for valid emails.
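Before adopting a dedicated tool, a basic profile can be approximated in plain pandas. This is a hedged sketch, not Great Expectations' API: the hypothetical `profile` helper summarizes each column's dtype, share of missing values, number of distinct values, and, for numeric columns, the minimum and maximum.

```python
import pandas as pd


def profile(df: pd.DataFrame) -> pd.DataFrame:
    """Summarize each column: dtype, missing share, distinct values, min and max."""
    rows = []
    for column in df.columns:
        series = df[column]
        numeric = pd.api.types.is_numeric_dtype(series)
        rows.append(
            {
                "column": column,
                "dtype": str(series.dtype),
                "null_fraction": float(series.isna().mean()),
                "n_unique": int(series.nunique(dropna=True)),
                # min/max only for numeric columns; left missing otherwise
                "min": series.min() if numeric else None,
                "max": series.max() if numeric else None,
            }
        )
    return pd.DataFrame(rows).set_index("column")


df = pd.DataFrame({"age": [25, 40, None, 40], "city": ["Oslo", "Lima", "Oslo", None]})
print(profile(df))
```

Columns with an unexpected dtype (for example, `object` where you expected numbers), a high `null_fraction`, or implausible `min`/`max` values are good candidates for explicit expectations like the ones listed above.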

3. Monitoring Data Drift

Data drift occurs when the statistical properties of a model's input data change over time relative to the data the model was trained on. Libraries like Alibi Detect can monitor for drift, helping you identify when your model's performance might degrade because input characteristics have changed.

Routine drift checks tell you when a model may need to be retrained or recalibrated.
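As a library-agnostic illustration of a drift check (this is not Alibi Detect's API), the sketch below computes the Population Stability Index (PSI) between a reference sample of one feature, such as its training values, and a recent production sample. Bin edges come from reference quantiles, and the outer bins are open-ended. The thresholds in the comment are commonly quoted rules of thumb, not universal standards, and the data is synthetic.

```python
import numpy as np


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between two 1-D samples of the same numeric feature."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)

    # Interior bin edges at reference quantiles; the first and last bins are
    # open-ended, so values outside the training range are still counted.
    quantiles = np.linspace(0, 1, n_bins + 1)[1:-1]
    edges = np.unique(np.quantile(reference, quantiles))

    n = len(edges) + 1
    ref_counts = np.bincount(np.searchsorted(edges, reference, side="right"), minlength=n)
    cur_counts = np.bincount(np.searchsorted(edges, current, side="right"), minlength=n)

    # Convert counts to proportions; eps avoids log(0) for empty bins.
    ref_pct = np.clip(ref_counts / ref_counts.sum(), eps, None)
    cur_pct = np.clip(cur_counts / cur_counts.sum(), eps, None)
    return float(np.sum((cur_pct - ref_pct) * np.log(cur_pct / ref_pct)))


# Rule of thumb (assumption): < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 major shift.
rng = np.random.default_rng(0)
train_feature = rng.normal(loc=0.0, scale=1.0, size=5_000)
live_feature = rng.normal(loc=0.5, scale=1.0, size=5_000)
print(population_stability_index(train_feature, live_feature))
```

PSI is computed per feature, so in practice you would run it over every monitored column on a schedule and alert on the ones that cross your chosen threshold.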

4. Statistical Tests for Outliers

Statistical outlier rules, such as z-score thresholds or Tukey's fences (the IQR rule), can significantly improve data quality. Applying them before model training matters because outliers can distort what the model learns, especially for models that are sensitive to extreme values, such as linear regression.
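A minimal sketch of both rules on a single numeric column, written with pandas. The thresholds (|z| > 3 and 1.5 times the IQR beyond the quartiles) are conventional defaults rather than requirements, and the sample values are made up for illustration.

```python
import pandas as pd


def flag_outliers(series: pd.Series, z_threshold: float = 3.0, iqr_k: float = 1.5) -> pd.DataFrame:
    """Flag values as outliers using the z-score rule and Tukey's fences."""
    s = pd.to_numeric(series, errors="coerce")

    # z-score rule: distance from the mean in units of (population) standard deviation.
    z = (s - s.mean()) / s.std(ddof=0)
    z_flag = z.abs() > z_threshold

    # Tukey's fences: values outside [Q1 - k*IQR, Q3 + k*IQR].
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    iqr_flag = (s < q1 - iqr_k * iqr) | (s > q3 + iqr_k * iqr)

    return pd.DataFrame({"value": s, "z_outlier": z_flag, "iqr_outlier": iqr_flag})


sample = pd.Series([10, 12, 11, 13, 12, 11, 95])
print(flag_outliers(sample))
```

On this seven-value sample, the z-score rule does not flag 95: with so few points, the extreme value inflates the standard deviation enough to keep its own z-score below 3. Tukey's fences are built from quartiles, which the outlier barely moves, so they do flag it. This masking effect is one reason to prefer quartile-based rules on small or heavily contaminated samples.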

5. Building Feedback Loops

Incorporating feedback loops enables ongoing validation. Real-time analytics and monitoring of incoming data streams show how models are performing on live data, which helps you catch anomalies early and revise the model accordingly.
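One simple way to close the loop is to validate each incoming batch and track the share of failing rows over a sliding window, raising a flag when it exceeds a tolerance. The `BatchFailureMonitor` class, its window size and the 5% threshold are hypothetical choices for illustration, not part of any particular monitoring tool.

```python
from collections import deque


class BatchFailureMonitor:
    """Track the share of invalid rows across the most recent batches."""

    def __init__(self, window: int = 20, max_failure_rate: float = 0.05):
        self.max_failure_rate = max_failure_rate
        # Each entry is (failed_rows, total_rows) for one batch; old batches fall off.
        self.history = deque(maxlen=window)

    def record(self, failed_rows: int, total_rows: int) -> bool:
        """Store one batch result; return True if the windowed rate exceeds the limit."""
        self.history.append((failed_rows, total_rows))
        return self.failure_rate() > self.max_failure_rate

    def failure_rate(self) -> float:
        failed = sum(f for f, _ in self.history)
        total = sum(t for _, t in self.history)
        return failed / total if total else 0.0
```

For example, with `window=3` and `max_failure_rate=0.05`, recording batches with 1, 2 and then 30 failures out of 100 rows returns `False`, `False`, then `True`, because the windowed rate rises from 1% to 11%. Pooling rows across the window, rather than averaging per-batch rates, keeps small batches from dominating the signal.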

Best Practices for Implementing Data Validation

To ensure effective data validation in your machine learning pipelines, consider the following best practices:

Diversify Validation Techniques: Employ multiple data validation techniques to capture different aspects of data quality.

Documentation: Keep thorough documentation for validation processes, capture cases of bad data, and maintain a history of changes made to datasets.

Collaborative Approach: Involve domain experts to validate both data and assumptions, as they can provide context that algorithms might miss.

Continuous Improvement: Regularly update your data validation strategies based on performance and feedback.

Test the Validation Framework: Like any other part of a machine learning pipeline, your validation framework needs tests that confirm it catches the problems it is meant to catch.
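Testing the validation framework can start with ordinary unit tests that feed known-bad inputs to a check and assert that it rejects them. The `check_age` function below is a hypothetical stand-in for your own validator; the test functions follow pytest's naming convention but also run as plain Python.

```python
import math


def check_age(value) -> bool:
    """Hypothetical validator: accept real numbers in [0, 120], reject everything else."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False
    if math.isnan(value):
        return False
    return 0 <= value <= 120


def test_accepts_valid_ages():
    for value in (0, 35, 120, 42.5):
        assert check_age(value)


def test_rejects_known_bad_values():
    # Out-of-range, NaN, wrong-type, missing and boolean inputs must all be rejected.
    for value in (-1, 121, float("nan"), "42", None, True):
        assert not check_age(value), f"validator accepted bad value {value!r}"


if __name__ == "__main__":
    test_accepts_valid_ages()
    test_rejects_known_bad_values()
    print("all validator tests passed")
```

Running the file with `pytest` discovers the `test_` functions automatically; the `__main__` block lets it run without pytest. Each time a new kind of bad data slips through in production, adding it to the rejection list keeps the validator from regressing.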

Bonus: Advanced Tips for Effective Data Validation

For seasoned practitioners, consider these advanced tips to further your data validation efforts:

Simulations: Simulate various failure scenarios in the validation process to prepare for potential future issues.

Version Control: Use Git for validation scripts, and a data versioning tool such as DVC or Git LFS for datasets, so you can track changes and roll back in case of errors.

Incorporate User Feedback: Implement feedback from end-users to refine data validation checks continuously.

Trade-offs: Balance validation thoroughness against processing speed; running every check on every record can slow down high-volume pipelines.
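The simulation tip can be put into practice with simple fault injection: corrupt a known fraction of an otherwise clean column and measure how many corrupted rows the validator catches. The `inject_faults` and `detection_rate` helpers, the three failure modes and the null-or-out-of-range check are illustrative assumptions, and the data is synthetic.

```python
import numpy as np
import pandas as pd


def inject_faults(df, column, fraction, rng):
    """Return a copy of df with `fraction` of rows in `column` corrupted, plus their index."""
    corrupted = df.copy()
    n_bad = int(len(df) * fraction)
    bad_idx = rng.choice(df.index.to_numpy(), size=n_bad, replace=False)
    # Simulated failure modes: missing values, negative sentinels, unit blow-ups.
    faults = rng.choice(["null", "negative", "x1000"], size=n_bad)
    corrupted[column] = corrupted[column].astype(float)
    for idx, fault in zip(bad_idx, faults):
        if fault == "null":
            corrupted.at[idx, column] = np.nan
        elif fault == "negative":
            corrupted.at[idx, column] = -1.0
        else:
            corrupted.at[idx, column] = corrupted.at[idx, column] * 1000
    return corrupted, bad_idx


def detection_rate(df, column, bad_idx, low=0, high=120):
    """Share of injected faults flagged by a null-or-out-of-range check."""
    values = df.loc[bad_idx, column]
    flagged = values.isna() | ~values.between(low, high)
    return float(flagged.mean())


rng = np.random.default_rng(42)
clean = pd.DataFrame({"age": rng.integers(18, 90, size=1_000)})
dirty, bad_idx = inject_faults(clean, "age", fraction=0.05, rng=rng)
print(f"detected {detection_rate(dirty, 'age', bad_idx):.0%} of injected faults")
```

A rate below 100% points to a failure mode your checks do not cover. In this toy setup every injected fault violates the range check, so a realistic simulation would also inject subtler corruptions, such as plausible but wrong values, that a simple range check cannot see.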

Conclusion

Data validation is a core pillar of machine learning pipeline integrity. By adopting rigorous data validation strategies, organizations can avert significant risks associated with bad data and improve the performance of their models. Inspect and validate your data at every stage, automate where possible, and engage domain experts. The long-term benefits are substantial: saved time and resources, and more accurate predictions that lead to better decisions. We encourage you to explore these insights, share your experiences, and implement these strategies to safeguard your machine learning efforts.

Enjoyed this article?

Subscribe to our newsletter and never miss out on new articles and updates.

More from Dev Orbit

From Autocompletion to Agentic Reasoning: The Evolution of AI Code Assistants

Discover how AI code assistants have progressed from simple autocompletion tools to highly sophisticated systems capable of agentic reasoning. This article explores the innovations driving this transformation and what it means for developers and technical teams alike.

How to Write an Essay Using PerfectEssayWriter.ai

Have you ever stared at a blank page, overwhelmed by the thought of writing an essay? You're not alone. Many students and professionals feel the anxiety that accompanies essay writing. However, with the advancements in AI technology, tools like PerfectEssayWriter.ai can transform your writing experience. This article delves into how you can leverage this tool to produce high-quality essays efficiently, streamline your writing process, and boost your confidence. Whether you're a student, a professional, or simply someone looking to improve your writing skills, this guide has you covered.

Top AI Tools to Skyrocket Your Team’s Productivity in 2025

As we embrace a new era of technology, the reliance on Artificial Intelligence (AI) is becoming paramount for teams aiming for high productivity. This blog will dive into the top-tier AI tools anticipated for 2025, empowering your team to automate mundane tasks, streamline workflows, and unleash their creativity. Read on to discover how these innovations can revolutionize your workplace and maximize efficiency.

MongoDB Insights in 2025: Unlock Powerful Data Analysis and Secure Your Database from Injection Attacks

MongoDB powers modern backend applications with flexibility and scalability, but growing data complexity demands better monitoring and security. MongoDB Insights tools provide critical visibility into query performance and help safeguard against injection attacks. This guide explores how to leverage these features for optimized, secure Python backends in 2025.

Raed Abedalaziz Ramadan: Steering Saudi Investment Toward the Future with AI and Digital Currencies

In an era marked by rapid technological advancements, the intersection of artificial intelligence and digital currencies is reshaping global investment landscapes. Industry leaders like Raed Abedalaziz Ramadan are pioneering efforts to integrate these innovations within Saudi Arabia’s economic framework. This article delves into how AI and digital currencies are being leveraged to position Saudi investments for future success, providing insights, strategies and practical implications for stakeholders.

Nexus Chat|与 Steve Yu 深入探讨 Nexus 生态系统

在这篇文章中,我们将深入探索 Nexus 生态系统,揭示它如何为未来的数字环境奠定基础,以及 Steve Yu 对这一范畴的深刻见解和前瞻性思考。

Releted Blogs

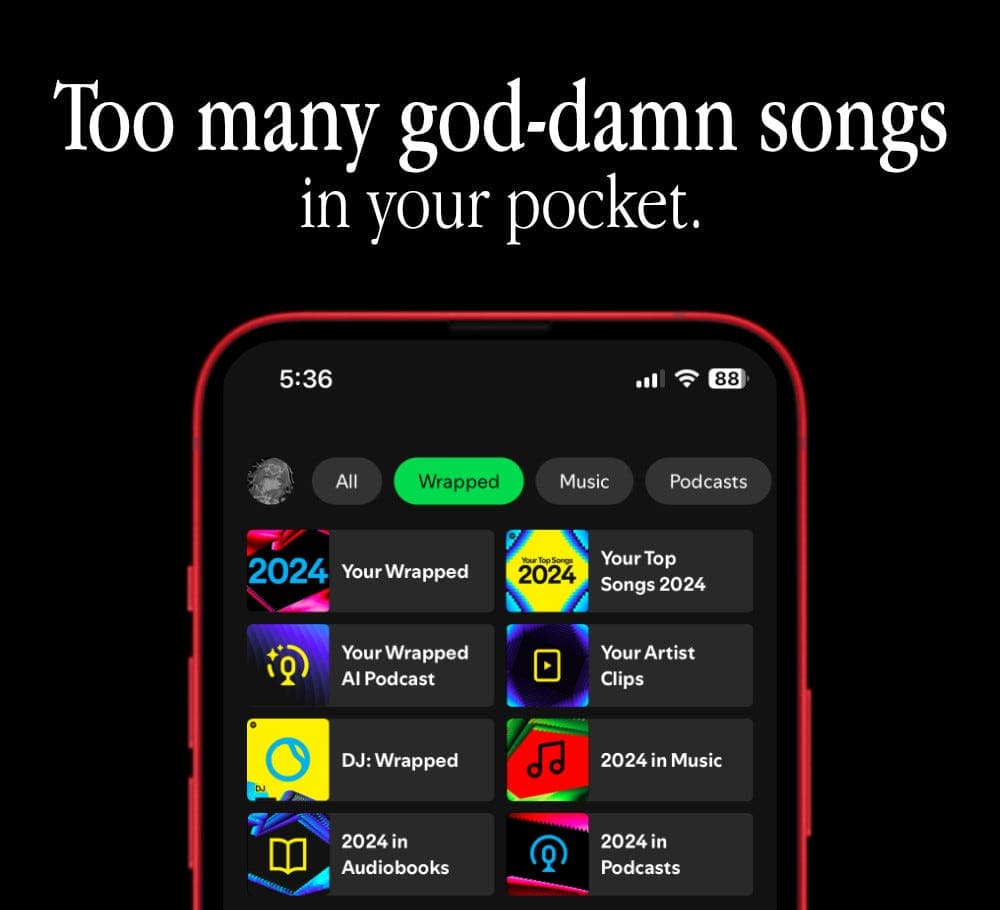

Spotify Wrapped Is Everything Wrong With The Music Industry

Every year, millions of Spotify users eagerly anticipate their Spotify Wrapped, revealing their most-listened-to songs, artists and genres. While this personalized year-in-review feature garners excitement, it also highlights critical flaws in the contemporary music industry. In this article, we explore how Spotify Wrapped serves as a microcosm of larger issues affecting artists, listeners and the industry's overall ecosystem.

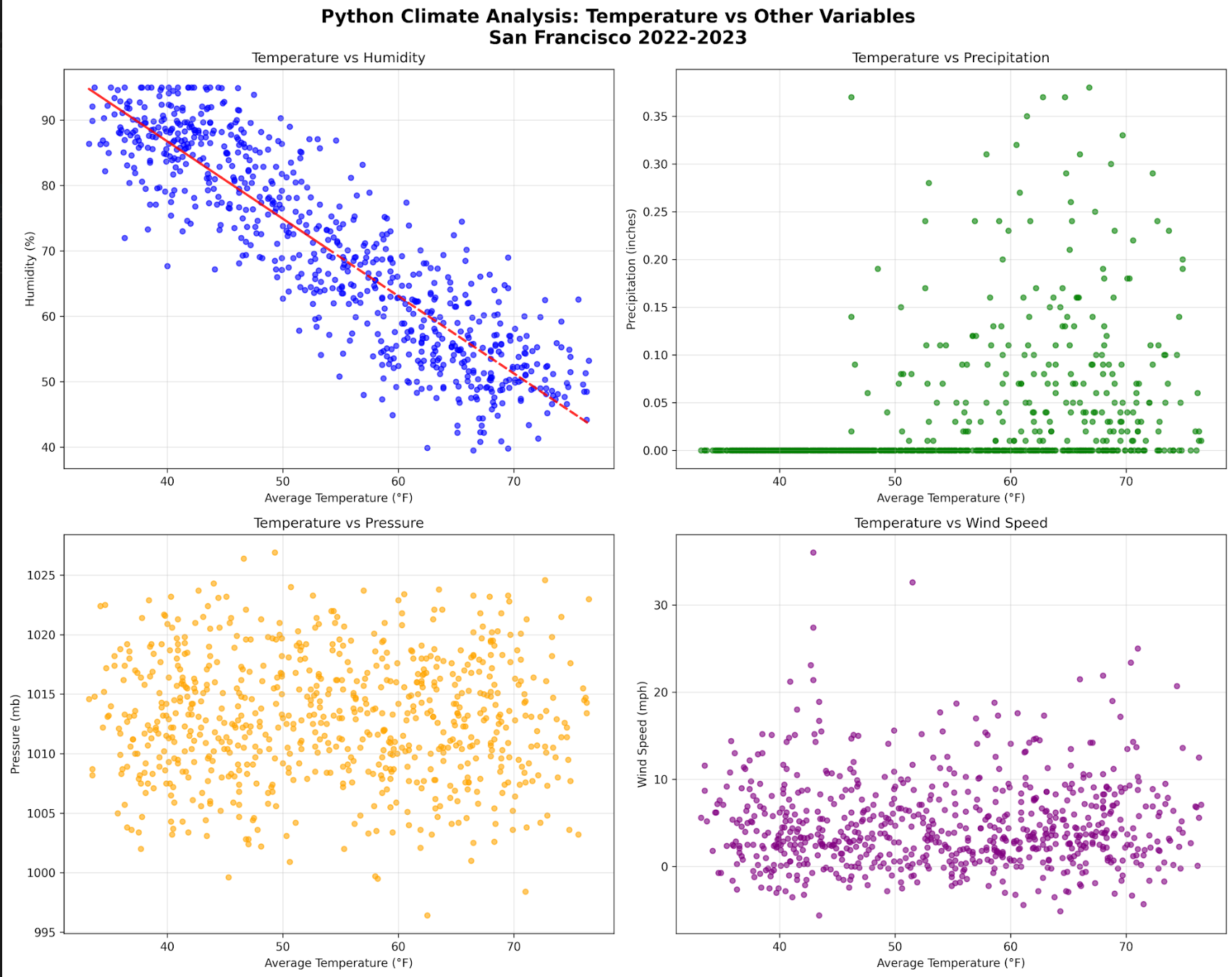

Python vs R vs SQL: Choosing Your Climate Data Stack

Delve into the intricacies of data analysis within climate science by exploring the comparative strengths of Python, R and SQL. This article will guide you through selecting the right tools for your climate data needs, ensuring efficient handling of complex datasets.

World Models: Machines That actually “Think”

Discover how advanced AI systems, often dubbed world models, are set to revolutionize the way machines interpret and interact with their environment. Dive deep into the underpinnings of machine cognition and explore practical applications.

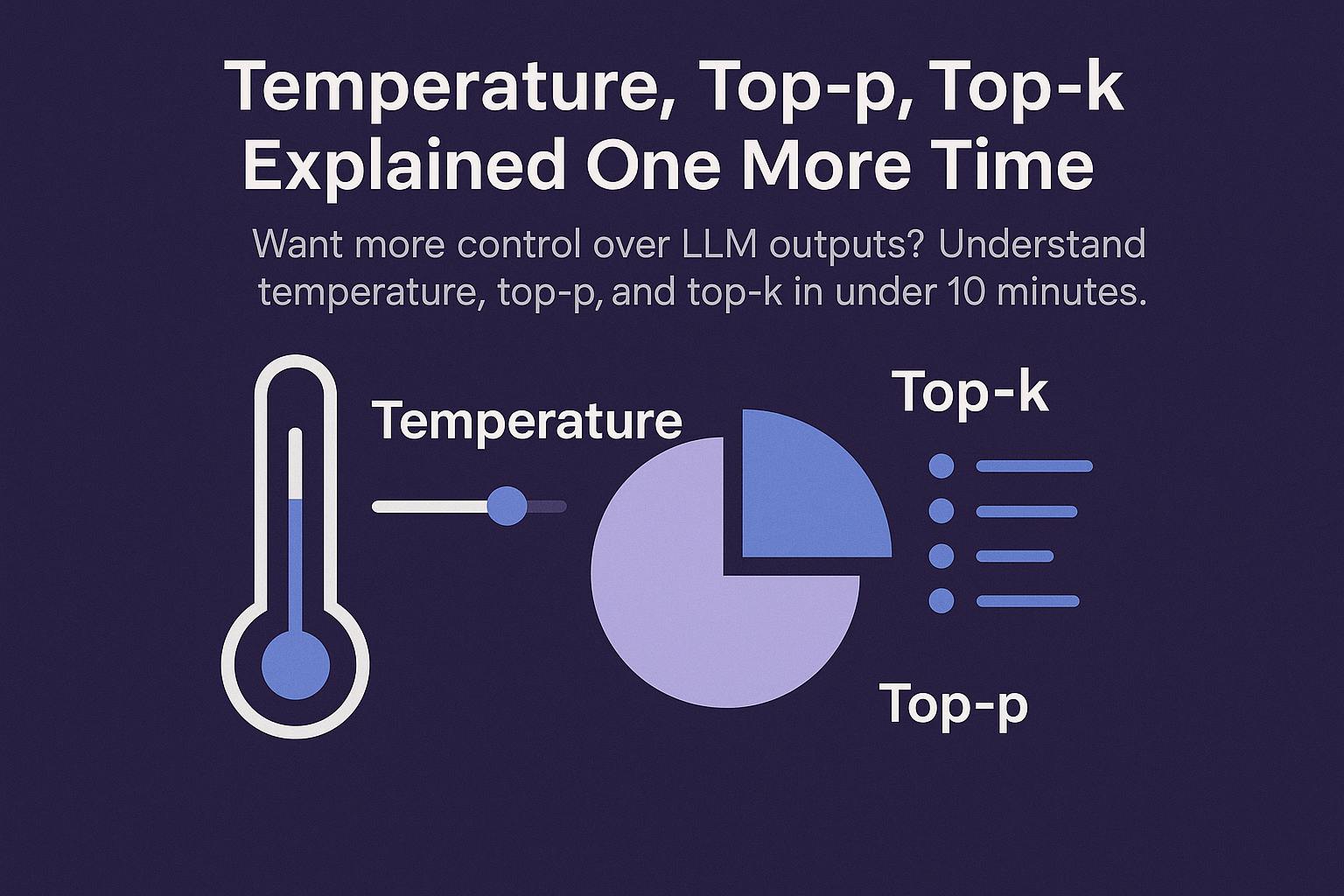

Temperature, Top-P, Top-K — Explained One More Time

This comprehensive guide delves into the intricacies of temperature, top-p, and top-k parameters in AI language models. Whether you're a developer or researcher, you'll learn how to leverage these settings to improve your model's performance and get the most out of AI-generated content.

Why Most People Waste Their AI Prompts ? How to Fix It...

In the current landscape of AI technology, many users struggle with crafting effective prompts. This article explores common pitfalls and offers actionable strategies to unlock the true potential of AI tools like GPT-5.

10 Powerful Tips for Efficient Database Management: SQL and NoSQL Integration in Node.js

Streamline your Node.js backend by mastering the integration of SQL and NoSQL databases—these 10 practical tips will help you write cleaner, faster and more scalable data operations.

Have a story to tell?

Join our community of writers and share your insights with the world.

Start Writing