🚀 Mastering Python Automation in 2025: Deep Insights, Real-World Use Cases & Secure Best Practices

Streamline your workflows, eliminate manual overhead and secure your automation pipelines with Python — the most powerful tool in your 2025 toolkit.

Dev Orbit

June 2, 2025

Why Python Automation Is No Longer Optional in 2025

From infrastructure orchestration to daily scripting, Python automation has evolved from a productivity trick to a development mandate. Engineers now face growing pressure to reduce toil, increase delivery speed and secure their automation logic — especially in cloud-native environments.

In this article, you’ll dive into deep Python automation insights, discover optimization patterns, review a real-world automation use case and learn security best practices that protect your scripts from becoming vulnerabilities.

Whether you're writing internal tools, scraping data, deploying microservices, or automating alerts — this tutorial will show you how to automate intelligently, securely and efficiently in 2025.

🧠 Concept: What Is Python Automation Really About?

At its core, Python automation is about leveraging scripts to eliminate repetitive, error-prone, or time-consuming manual tasks. But in 2025, it’s more than that:

Modern Python automation is the intersection of scripting, orchestration, observability and security.

Think of it as a well-trained assistant that:

Watches over your infrastructure

Moves files and data with intent

Triggers alerts or remediations automatically

Audits and secures itself

📌 Analogy: Imagine hiring a junior developer to handle your grunt work. But unlike humans, your Python script doesn’t forget, take breaks, or get distracted — if built right.

🧩 How It Works: Python Automation in Action (with Code & Diagram)

Let’s walk through a simple but extensible automation pattern: monitoring a directory and uploading files to S3 when they appear.

🔧 Setup Requirements

    pip install boto3 watchdog python-dotenv

This script will watch a directory, detect new files and upload them to an AWS S3 bucket, printing the result of each upload. Retry handling is covered in the tips further down.

📂 File Structure

    automation_s3/
    ├── .env
    ├── uploader.py
    ├── watcher.py
    └── main.py

🧑‍💻 Step 1: Configure Environment Secrets (.env)

    AWS_ACCESS_KEY_ID=your_access_key
    AWS_SECRET_ACCESS_KEY=your_secret_key
    AWS_REGION=us-east-1
    S3_BUCKET=my-bucket-name
    WATCH_FOLDER=/path/to/folder

✅ Best Practice: Never hard-code secrets. Use .env + dotenv.
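A .env file only helps if the script notices when a value is missing. Here is a minimal sketch of a fail-fast settings loader. The `Settings` class and `load_settings` function are illustrative names, not part of the original scripts; the keys match the .env file above.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Key names taken from the .env file above.
REQUIRED_KEYS = ("AWS_REGION", "S3_BUCKET", "WATCH_FOLDER")


@dataclass(frozen=True)
class Settings:
    region: str
    bucket: str
    watch_folder: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read required settings from `env` and fail fast if any are missing or empty."""
    missing = [key for key in REQUIRED_KEYS if not env.get(key)]
    if missing:
        raise RuntimeError(f"Missing required settings: {', '.join(missing)}")
    return Settings(
        region=env["AWS_REGION"],
        bucket=env["S3_BUCKET"],
        watch_folder=env["WATCH_FOLDER"],
    )


if __name__ == "__main__":
    # In the real scripts, call load_dotenv() first so .env values land in os.environ.
    example = {
        "AWS_REGION": "us-east-1",
        "S3_BUCKET": "my-bucket-name",
        "WATCH_FOLDER": "/path/to/folder",
    }
    print(load_settings(example))
```

Passing the mapping as an argument keeps the loader easy to test without touching the real environment.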

🔄 Step 2: Upload Logic (uploader.py)

    import os

    import boto3
    from dotenv import load_dotenv

    # Load AWS credentials and settings from .env into the environment.
    load_dotenv()

    s3 = boto3.client(
        's3',
        aws_access_key_id=os.getenv("AWS_ACCESS_KEY_ID"),
        aws_secret_access_key=os.getenv("AWS_SECRET_ACCESS_KEY"),
        region_name=os.getenv("AWS_REGION")
    )

    def upload_to_s3(file_path: str, bucket: str):
        """Upload one file to the bucket, using its base name as the object key."""
        try:
            file_name = os.path.basename(file_path)
            s3.upload_file(file_path, bucket, file_name)
            print(f"✅ Uploaded: {file_name}")
        except Exception as e:
            print(f"❌ Upload failed: {e}")

👀 Step 3: Watcher Logic (watcher.py)

    import os
    import time

    from watchdog.observers import Observer
    from watchdog.events import FileSystemEventHandler

    from uploader import upload_to_s3

    class Watcher(FileSystemEventHandler):
        def on_created(self, event):
            # Ignore new subdirectories; upload only files.
            if not event.is_directory:
                print(f"📂 Detected new file: {event.src_path}")
                upload_to_s3(event.src_path, os.getenv("S3_BUCKET"))

    def start_watcher(path):
        event_handler = Watcher()
        observer = Observer()
        observer.schedule(event_handler, path, recursive=False)
        observer.start()
        print(f"🟢 Watching folder: {path}")
        try:
            # Keep the main thread alive; the observer runs in its own thread.
            while True:
                time.sleep(1)
        except KeyboardInterrupt:
            observer.stop()
        observer.join()

🚀 Step 4: Main Runner (main.py)

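    import os

    from dotenv import load_dotenv

    from watcher import start_watcher

    load_dotenv()

    folder = os.getenv("WATCH_FOLDER")
    # Fail early with a clear message if the folder is not configured.
    if not folder:
        raise SystemExit("WATCH_FOLDER is not set; add it to .env")
    start_watcher(folder)

🖼️ Diagram Placeholder: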

A flowchart showing New File Detected → Upload Triggered → S3 Upload → Console Log.
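One caveat worth knowing: a file-created event fires as soon as the file appears, which can be before the writer has finished. A simple workaround, sketched here under the assumption that a file whose size has stopped changing is complete, is to poll the size before uploading. `wait_until_stable` is a hypothetical helper, not part of watchdog.

```python
import os
import time


def wait_until_stable(path: str, interval: float = 0.5, checks: int = 3,
                      timeout: float = 30.0) -> bool:
    """Return True once the file size is unchanged for `checks` consecutive polls.

    Returns False if the file disappears or `timeout` seconds pass first.
    """
    deadline = time.monotonic() + timeout
    last_size = -1
    stable_polls = 0
    while time.monotonic() < deadline:
        try:
            size = os.path.getsize(path)
        except OSError:
            return False  # file was removed or cannot be read
        if size == last_size:
            stable_polls += 1
            if stable_polls >= checks:
                return True
        else:
            # Size changed (or this is the first poll): start counting again.
            last_size = size
            stable_polls = 0
        time.sleep(interval)
    return False
```

In the watcher, you could call `wait_until_stable(event.src_path)` inside `on_created` and upload only when it returns True. The call blocks the handler while it polls, so for busy folders a queue and a worker thread may be a better fit.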

🌍 Real-World Use Case: Automating Daily CSV Uploads in Healthcare

At a mid-sized healthcare analytics firm, engineers faced this scenario:

Internal systems exported patient metrics as CSVs.

Every night, analysts manually uploaded these to S3 for a BI pipeline.

Errors were common. Delays even more so.

✅ Solution with Python Automation:

They used the above script with enhancements:

✅ Verified file extensions (.csv)

✅ Logged all activity to CloudWatch

✅ Sent Slack alerts on failures via webhook

✅ Encrypted files with AWS KMS before upload

📈 Impact: Saved 3+ hours/day across teams, eliminated late uploads, added an audit trail.
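The firm's actual code isn't shown, so here is a rough sketch of how three of those enhancements might look. All function names are hypothetical. The encryption part uses S3 server-side encryption with a KMS key (SSE-KMS) via `ExtraArgs`, which is a swapped-in technique and not the same as encrypting the file locally before upload. CloudWatch logging is left out.

```python
import json
import os
import urllib.request

ALLOWED_EXTENSIONS = {".csv"}


def is_allowed(file_path: str) -> bool:
    """Accept only files whose extension is in ALLOWED_EXTENSIONS (case-insensitive)."""
    return os.path.splitext(file_path)[1].lower() in ALLOWED_EXTENSIONS


def kms_extra_args(kms_key_id: str) -> dict:
    """Build ExtraArgs for boto3's upload_file so S3 encrypts the object with a KMS key."""
    return {"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": kms_key_id}


def slack_payload(file_path: str, error: Exception) -> bytes:
    """JSON body for a Slack incoming webhook."""
    text = f"Upload failed for {os.path.basename(file_path)}: {error}"
    return json.dumps({"text": text}).encode("utf-8")


def send_slack_alert(webhook_url: str, file_path: str, error: Exception) -> None:
    """POST the alert to the webhook; network errors propagate to the caller."""
    request = urllib.request.Request(
        webhook_url,
        data=slack_payload(file_path, error),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        response.read()
```

With these in place, the upload call from Step 2 would become something like `s3.upload_file(file_path, bucket, file_name, ExtraArgs=kms_extra_args(key_id))`, guarded by `is_allowed(file_path)`, where `key_id` is a KMS key you supply.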

💡 Bonus Tips & Advanced Optimizations

⚙️ 1. Optimize for Performance with Async Uploads

For high-frequency file creation or large file sets, switch to aiofiles and aioboto3.

    pip install aioboto3 aiofiles

This can improve throughput by 40–60% under heavy load, though the actual gain depends on file sizes, concurrency limits and network conditions.
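To show the shape of the async approach without requiring AWS access, here is a standard-library sketch of bounded-concurrency uploads. `upload_all` and `fake_upload` are illustrative names; `fake_upload` is a stand-in for wherever your real aioboto3 upload call would go.

```python
import asyncio
from typing import Awaitable, Callable, Iterable


async def upload_all(
    paths: Iterable[str],
    upload_one: Callable[[str], Awaitable[None]],
    max_concurrency: int = 8,
) -> dict:
    """Run `upload_one` for every path, at most `max_concurrency` at a time.

    Returns a dict mapping each path to None on success or to the exception raised.
    """
    semaphore = asyncio.Semaphore(max_concurrency)
    results: dict = {}

    async def worker(path: str) -> None:
        async with semaphore:
            try:
                await upload_one(path)
                results[path] = None
            except Exception as exc:  # record the failure, keep other uploads going
                results[path] = exc

    await asyncio.gather(*(worker(p) for p in paths))
    return results


async def fake_upload(path: str) -> None:
    """Stand-in for the real async S3 call; fails for one name to show error handling."""
    await asyncio.sleep(0.01)
    if path.endswith("bad.csv"):
        raise IOError("simulated failure")


if __name__ == "__main__":
    outcome = asyncio.run(
        upload_all(["a.csv", "b.csv", "bad.csv"], fake_upload, max_concurrency=2)
    )
    for path, error in outcome.items():
        print(path, "ok" if error is None else f"failed: {error}")
```

The semaphore is the important part: without a cap, a burst of new files can open far more connections than your network or S3 request limits comfortably allow.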

🔐 2. Security Tip: Rotate AWS Keys Automatically

Prefer IAM roles, which hand out short-lived credentials automatically, or use a tool like AWS Secrets Manager to rotate keys on a schedule.

🔐 Never expose long-lived AWS keys in plaintext, even in .env.
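One way to enforce this is a small startup guard, sketched here with a hypothetical `uses_long_lived_key` helper. It relies on the AWS convention that long-term access key IDs start with "AKIA", while temporary credentials start with "ASIA" and come with a session token.

```python
import os
from typing import Mapping


def uses_long_lived_key(env: Mapping[str, str] = os.environ) -> bool:
    """True if the environment holds a long-term IAM user access key.

    Long-term access key IDs start with "AKIA"; temporary STS credentials
    start with "ASIA" and are accompanied by AWS_SESSION_TOKEN.
    """
    key_id = env.get("AWS_ACCESS_KEY_ID", "")
    return key_id.startswith("AKIA") and not env.get("AWS_SESSION_TOKEN")


if __name__ == "__main__":
    if uses_long_lived_key():
        print("Warning: long-lived AWS key detected; prefer an IAM role.")
```

When the script runs under an IAM role (on EC2, ECS or Lambda, for example), you can drop the key arguments entirely: `boto3.client("s3")` picks up the role's credentials through its default credential chain.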

⚠️ 3. Build Resilience with Retry Logic

Add a retry decorator (e.g., from tenacity) to handle intermittent failures such as S3 timeouts.
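To make the idea concrete, here is a minimal standard-library sketch of what a retry decorator does. It is an illustration of the pattern, not how tenacity is implemented, and `flaky_upload` is a hypothetical function that simulates two timeouts.

```python
import functools
import time


def retry(attempts: int = 3, base_delay: float = 0.5, exceptions: tuple = (Exception,)):
    """Retry the wrapped function up to `attempts` times with exponential backoff."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, attempts + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == attempts:
                        raise  # out of attempts: surface the last error
                    time.sleep(base_delay * 2 ** (attempt - 1))
        return wrapper
    return decorator


calls = {"count": 0}


@retry(attempts=3, base_delay=0.01, exceptions=(TimeoutError,))
def flaky_upload(file_path: str) -> str:
    """Hypothetical upload that times out twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("simulated S3 timeout")
    return f"uploaded {file_path}"


if __name__ == "__main__":
    print(flaky_upload("report.csv"), "after", calls["count"], "attempts")
```

Note that a retry wrapper only fires when the function raises. The `upload_to_s3` from Step 2 catches every exception and prints it, so it would need to re-raise (or drop its try/except) for retries to take effect.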

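    pip install tenacity

    from tenacity import retry, stop_after_attempt, wait_exponential

    # In uploader.py, where s3 and os are already defined.
    # Retries only happen when the function raises, so let exceptions propagate.
    @retry(stop=stop_after_attempt(3), wait=wait_exponential(multiplier=1, max=10), reraise=True)
    def upload_to_s3(file_path: str, bucket: str):
        s3.upload_file(file_path, bucket, os.path.basename(file_path))

🏁 Conclusion: Automate Smarter, Safer and for the Long Term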

Python automation isn’t just a productivity hack — it’s a strategic advantage. Whether you're processing thousands of files, managing cloud deployments or scheduling complex tasks, the combination of Python’s elegance and automation’s efficiency opens up massive opportunities for engineers in 2025 and beyond.

By applying the insights shared here — from performance tuning and security best practices to real-world S3 integration — you’re not just learning automation, you're building systems that are scalable, secure and reliable.

💬 Found this useful?

🔁 Share with your dev team.

Enjoyed this article?

Subscribe to our newsletter and never miss out on new articles and updates.

More from Dev Orbit

Redefining Customer Care at Travelgate: Our Journey to AI-Driven Support

In today’s fast-paced world, customer expectations are constantly evolving, making it crucial for companies to adapt their support strategies. At Travelgate, we've embarked on a transformative journey to redefine customer care through advanced AI systems, driven by GPT-5 technology. This article details our experiences, lessons learned, and how AI solutions have revolutionized our customer support while enhancing user satisfaction and operational efficiency.

Containerized AI: What Every Node Operator Needs to Know

In the rapidly evolving landscape of artificial intelligence, containerization has emerged as a crucial methodology for deploying AI models efficiently. For node operators, understanding the interplay between containers and AI systems can unlock substantial benefits in scalability and resource management. In this guide, we'll delve into what every node operator needs to be aware of when integrating containerized AI into their operations, from foundational concepts to practical considerations.

9 Real-World Python Fixes That Instantly Made My Scripts Production-Ready

In this article, we explore essential Python fixes and improvements that enhance script stability and performance, making them fit for production use. Learn how these practical insights can help streamline your workflows and deliver reliable applications.

Event-Driven Architecture in Node.js

Event Driven Architecture (EDA) has emerged as a powerful paradigm for building scalable, responsive, and loosely coupled systems. In Node.js, EDA plays a pivotal role, leveraging its asynchronous nature and event-driven capabilities to create efficient and robust applications. Let’s delve into the intricacies of Event-Driven Architecture in Node.js exploring its core concepts, benefits, and practical examples.

Top AI Tools to Skyrocket Your Team’s Productivity in 2025

As we embrace a new era of technology, the reliance on Artificial Intelligence (AI) is becoming paramount for teams aiming for high productivity. This blog will dive into the top-tier AI tools anticipated for 2025, empowering your team to automate mundane tasks, streamline workflows, and unleash their creativity. Read on to discover how these innovations can revolutionize your workplace and maximize efficiency.

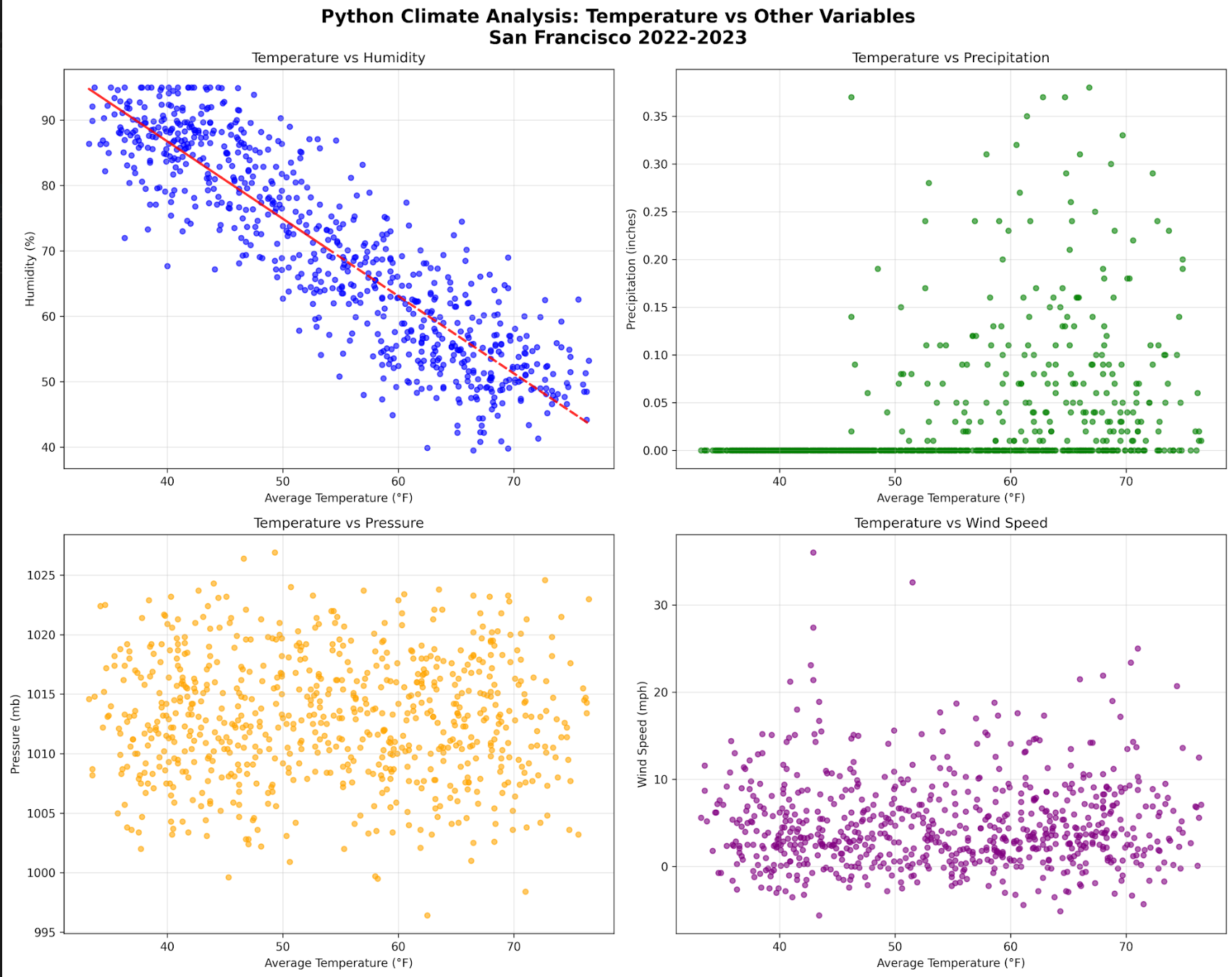

Python vs R vs SQL: Choosing Your Climate Data Stack

Delve into the intricacies of data analysis within climate science by exploring the comparative strengths of Python, R and SQL. This article will guide you through selecting the right tools for your climate data needs, ensuring efficient handling of complex datasets.

Releted Blogs

Top 7 Python Certifications for 2026 to Boost Your Career

Python continues to dominate as the most versatile programming language across AI, data science, web development and automation. If you’re aiming for a career upgrade, a pay raise or even your very first developer role, the right Python certification can be a game-changer. In this guide, we’ll explore the top 7 Python certifications for 2026 from platforms like Coursera, Udemy and LinkedIn Learning—an ROI-focused roadmap for students, career switchers and junior devs.

How to Build an App Like SpicyChat AI: A Complete Video Chat Platform Guide

Are you intrigued by the concept of creating your own video chat platform like SpicyChat AI? In this comprehensive guide, we will walk you through the essentials of building a robust app that not only facilitates seamless video communication but also leverages cutting-edge technology such as artificial intelligence. By the end of this post, you'll have a clear roadmap to make your video chat application a reality, incorporating intriguing features that enhance user experience.

Improving API Performance Through Advanced Caching in a Microservices Architecture

Unlocking Faster API Responses and Lower Latency by Mastering Microservices Caching Strategies

MongoDB Insights in 2025: Unlock Powerful Data Analysis and Secure Your Database from Injection Attacks

MongoDB powers modern backend applications with flexibility and scalability, but growing data complexity demands better monitoring and security. MongoDB Insights tools provide critical visibility into query performance and help safeguard against injection attacks. This guide explores how to leverage these features for optimized, secure Python backends in 2025.

📌Self-Hosting Secrets: How Devs Are Cutting Costs and Gaining Control

Self-hosting is no longer just for the tech-savvy elite. In this deep-dive 2025 tutorial, we break down how and why to take back control of your infrastructure—from cost, to security, to long-term scalability.

Best Cloud Hosting for Python Developers in 2025 (AWS vs GCP vs DigitalOcean)

Finding the Right Python Cloud Hosting in 2025 — Without the Headaches Choosing cloud hosting as a Python developer in 2025 is no longer just about uptime or bandwidth. It’s about developer experience, cost efficiency and scaling with minimal friction. In this guide, we’ll break down the top options — AWS, GCP and DigitalOcean — and help you make an informed choice for your projects.

Have a story to tell?

Join our community of writers and share your insights with the world.

Start Writing