Temperature, Top-P, Top-K — Explained One More Time

This guide explains the temperature, top-p, and top-k parameters that control how AI language models sample text. Whether you're a developer or a researcher, you'll learn how to tune these settings to get output that better fits your application.

Dev Orbit

July 15, 2025

Introduction

As AI technology evolves, understanding its core components matters more and more for developers and researchers alike. Among them, the temperature, top-p, and top-k parameters play a significant role in how models produce language, yet many people still find these concepts confusing. Even as models like GPT-5 grow more capable, grasping the nuances of these parameters remains essential for getting the most out of your AI applications. In this post, we unpack temperature, top-p, and top-k so that you walk away with practical insights you can apply to your own language generation tasks.

What is Temperature?

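Temperature is a key parameter that controls how creative or deterministic a language model's output is. It adjusts randomness by reshaping the probability distribution over candidate next tokens. The model's raw scores (logits) are divided by the temperature before being converted into probabilities, so low values sharpen the distribution and high values flatten it.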

Understanding Temperature Dynamics

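The usable range depends on the provider. Many APIs accept values from 0 to 1, and some allow values up to 2. A temperature of 0 is commonly treated as greedy decoding, which always picks the most probable token: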

Low Temperature (0.0 - 0.3): At a low temperature, the model becomes more conservative and strongly favors the highest-probability tokens. This suits tasks where consistency matters, such as factual Q&A or structured extraction. A low temperature alone does not, however, guarantee factual accuracy.

Medium Temperature (0.4 - 0.7): A moderate temperature allows for a balance between creativity and consistency. It enables models to explore alternatives while staying relatively grounded in their predictions.

High Temperature (0.8 - 1.0 and above): At high temperatures, the model’s output becomes more random and diverse. This can produce creative and surprising text, but it may also lead to incoherence or gibberish, especially as values climb above 1.
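The sketch below makes these ranges concrete. It is a minimal Python example of temperature scaling that assumes the standard formulation p_i = exp(z_i / T) / Σ_j exp(z_j / T) over raw logits z. The function name `softmax_with_temperature` and the logit values are hypothetical and chosen only for illustration. Hosted APIs perform this step internally.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw logits into probabilities after dividing them by `temperature`.

    Lower temperatures sharpen the distribution; higher ones flatten it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (treat 0 as greedy decoding)")
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for four candidate tokens
for t in (0.2, 0.7, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]}")
```

As the temperature increases, the top token's probability shrinks and the lower-ranked tokens gain probability mass.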

Real-World Application of Temperature

Consider a practical example: you're developing a creative writing tool. A high temperature can help generate unconventional phrases and unique storytelling arcs. Conversely, if you're designing a customer-service chatbot, a low temperature is advisable to keep responses consistent and predictable.

Exploring Top-K Sampling

Top-k sampling is another common strategy that shapes how language models generate text. Temperature reshapes the whole probability distribution. Top-k sampling instead restricts the choice to a fixed number of the most probable tokens.

How Top-K Works

In top-k sampling, the candidate next tokens are ranked by probability and everything below the top k is discarded. The remaining probabilities are then renormalized so they sum to 1, and a token is sampled from them. Here's how to think about it:

Set "k" Value: By choosing a value for k, you can define how many top options the model will explore. For example, setting k to 10 means the model will consider the ten words with the highest probabilities.

Implications of Different k Values: A lower k produces more focused and potentially repetitive text, because the model chooses among only a few candidates (k = 1 is equivalent to greedy decoding). A higher k allows more diversity, but it can also let in low-probability, erratic choices.
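The filtering step described above can be sketched in a few lines of Python. This is an illustrative sketch, not any particular library's implementation. The names `top_k_filter` and `sample` and the example distribution are hypothetical, and sampling uses Python's standard `random.choices`.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize; the rest get probability 0."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Token indices sorted from most to least probable.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep = set(ranked[:k])
    kept_mass = sum(probs[i] for i in keep)
    return [probs[i] / kept_mass if i in keep else 0.0 for i in range(len(probs))]


def sample(probs, rng):
    """Draw one token index in proportion to its probability."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


probs = [0.5, 0.2, 0.15, 0.1, 0.05]  # hypothetical next-token distribution
filtered = top_k_filter(probs, k=2)
rng = random.Random(0)  # seeded so the demo is repeatable
print([round(p, 3) for p in filtered])
print([sample(filtered, rng) for _ in range(10)])  # only indices 0 and 1 can appear
```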

Top-K in Practice

Imagine you are building a text summarization tool. A lower top-k keeps the model on its most probable continuations, which tends to produce more focused, conservative summaries. For a poetry generator, by contrast, a higher k allows a more diverse use of language.

Understanding Top-P (Nucleus Sampling)

Top-p sampling, also known as nucleus sampling, is similar to top-k but approaches randomness from a different angle. Instead of keeping a fixed number of top options, top-p keeps a dynamically sized set of tokens whose cumulative probability reaches a threshold p.

The Mechanism Behind Top-P

In top-p sampling:

Cumulative Probability: The model evaluates words based on their probabilities and selects from the smallest group of words that adds up to at least 'p' probability. For example, with p set to 0.9, the model chooses from the smallest group of words that, together, have a cumulative probability of at least 90%.

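Adaptive Variability: The strength of top-p is its flexibility. When the model is confident, a handful of tokens (sometimes just one) already reach the threshold, so the candidate set is small. When the model is uncertain, probability is spread out and more tokens are included. The candidate set therefore adapts to the context instead of staying fixed at k.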
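A similar sketch for nucleus sampling shows this adaptive behavior. As before, the function name and both distributions are hypothetical and made up for illustration. With p = 0.9, a confident distribution keeps very few tokens, while a flatter one keeps several.

```python
def top_p_filter(probs, p):
    """Nucleus filtering: keep the smallest set of most-probable tokens whose
    cumulative probability reaches p, then renormalize the survivors."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in ranked:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= p:
            break  # smallest prefix that reaches the threshold
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


confident = [0.92, 0.05, 0.02, 0.01]         # hypothetical: model is sure
flat = [0.30, 0.25, 0.20, 0.12, 0.08, 0.05]  # hypothetical: model is unsure
for name, dist in (("confident", confident), ("flat", flat)):
    filtered = top_p_filter(dist, p=0.9)
    kept = sum(1 for x in filtered if x > 0)
    print(f"{name}: {kept} token(s) kept -> {[round(x, 3) for x in filtered]}")
```

With these numbers, the confident distribution keeps one token and the flat one keeps five.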

Practical Uses for Top-P

For applications such as conversational agents, top-p sampling can make responses more varied. A moderate p helps keep interactions engaging while maintaining coherence. In an AI-driven story generator, lowering p yields more focused narratives, while raising it allows more expansive, unpredictable plots.

Combining Temperature, Top-P, and Top-K

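Understanding how temperature, top-p, and top-k interact is key to tuning your model’s output. They are not mutually exclusive. Many implementations apply them in sequence: temperature reshapes the distribution, then top-k and top-p trim it. Using them together can produce results tailored to specific contexts. Note that some providers, such as OpenAI, recommend adjusting either temperature or top-p, but not both.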
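The sketch below puts the pieces together. It runs one decoding step in a common order: temperature scaling, then top-k, then top-p, then sampling. Treat that ordering as an assumption. Some open-source generation libraries apply the filters this way, but hosted APIs may differ or may not expose all three parameters. `sample_next_token` and its arguments are hypothetical names.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, rng=None):
    """One hypothetical decoding step: temperature -> top-k -> top-p -> sample.

    Returns the index of the chosen token. The filter order is an assumption.
    """
    if rng is None:
        rng = random.Random()
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: pick the highest logit.
        return max(range(len(logits)), key=lambda i: logits[i])

    # 1. Temperature-scaled softmax (max subtracted for numerical stability).
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Candidate indices, most probable first.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # 2. Top-k: keep only the k most probable candidates.
    if top_k is not None:
        ranked = ranked[:top_k]

    # 3. Top-p: among the survivors (renormalized), keep the smallest prefix
    #    whose cumulative probability reaches top_p.
    if top_p is not None:
        mass = sum(probs[i] for i in ranked)
        nucleus, cumulative = [], 0.0
        for i in ranked:
            nucleus.append(i)
            cumulative += probs[i] / mass
            if cumulative >= top_p:
                break
        ranked = nucleus

    # 4. Sample among the remaining candidates in proportion to their probability.
    return rng.choices(ranked, weights=[probs[i] for i in ranked], k=1)[0]


logits = [2.0, 1.5, 0.3, -0.5, -2.0]  # hypothetical scores for five tokens
rng = random.Random(42)  # seeded so the demo is repeatable
picks = [sample_next_token(logits, temperature=0.7, top_k=3, top_p=0.9, rng=rng)
         for _ in range(20)]
print(picks)
```

Because top-k runs first here, top-p only ever trims the k survivors. With `top_k=3`, every pick in the example is one of the three highest-scoring tokens.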

Best Practices for Combination Usage

Experimentation: Test different combinations to see how they affect the quality of the generated text. For instance, you might find that a medium temperature combined with a moderate top-k yields more coherent stories than either extreme.

Contextual Adjustment: Use cases vary, so always tune these parameters for the context in which they'll be used. Start with lower temperatures for technical applications and higher ones for creative work.

Iterate and Evaluate: Evaluate outputs continuously to make sure the chosen parameters still fit user needs. Collect feedback on generated content and use it to refine your sampling configuration.
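For the experimentation step, one quick way to build intuition before testing with real users is to sweep a small grid of settings and measure how varied the resulting distribution is. The sketch below uses Shannon entropy (in bits) as a rough stand-in for diversity. The logits, the grid, and the helper names are all hypothetical. Entropy is only a proxy for variety, not a measure of output quality.

```python
import itertools
import math


def effective_distribution(logits, temperature, top_p):
    """Temperature-scaled softmax followed by top-p filtering (renormalized)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def entropy_bits(dist):
    """Shannon entropy in bits; higher means more varied sampling."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


logits = [3.0, 2.2, 1.8, 0.5, 0.1, -1.0]  # hypothetical next-token logits
for temperature, top_p in itertools.product((0.3, 0.7, 1.2), (0.5, 0.9, 1.0)):
    dist = effective_distribution(logits, temperature, top_p)
    print(f"T={temperature:<4} p={top_p:<4} tokens={len(dist)} "
          f"entropy={entropy_bits(dist):.2f} bits")
```

Expect entropy and the number of surviving tokens to generally rise with temperature and with p. Whether that extra variety actually helps is what the evaluation and feedback loop should decide.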

Bonus/Advanced Tips

Maximizing the effectiveness of temperature, top-p, and top-k parameters requires more than just basic understanding. Here are some advanced considerations and practices:

Advanced Considerations

Time-Based Adjustments: For applications like news generation, consider adjusting temperature and other sampling parameters in response to recent significant events so that content stays relevant.

Seed Values and Community Feedback: Where your library or API supports it, fix a random seed to make sampled outputs reproducible during testing. Use anchor phrases in the prompt to steer generation. Involve your community as well, and let user feedback and behavior inform adaptive parameter settings.

Monitoring Drift: Track how both model outputs and user behavior evolve over time. Settings that work now may need adjusting later as models are updated or user preferences change.
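To illustrate the seed point: in a local sampling loop, seeding the random number generator makes runs repeatable, which is useful for regression tests and A/B comparisons. The sketch below simplifies by drawing repeatedly from one fixed, hypothetical distribution, whereas a real model produces a new distribution at every step. `sample_sequence` is a hypothetical helper. Hosted APIs that accept a seed may offer only best-effort determinism, so check your provider's documentation.

```python
import random


def sample_sequence(probs, length, seed):
    """Draw `length` token indices from a fixed distribution using a seeded RNG."""
    rng = random.Random(seed)  # independent generator; leaves global random state alone
    return rng.choices(range(len(probs)), weights=probs, k=length)


probs = [0.4, 0.3, 0.2, 0.1]  # hypothetical next-token distribution
run_a = sample_sequence(probs, 12, seed=1234)
run_b = sample_sequence(probs, 12, seed=1234)
run_c = sample_sequence(probs, 12, seed=99)
print(run_a == run_b)  # same seed -> identical draws
print(run_a)
print(run_c)
```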

Warnings and Cautions

Excessive Randomness: High temperature, top-k, or top-p values can create surprising content, but too much randomness quickly leads to incoherent output. Finding a balance is crucial.

Overfitting to User Preferences: Tuning parameters solely on user feedback can overfit your configuration to a narrow set of preferences. Over time, this may degrade output quality for other users or tasks.

Conclusion

In this article, we've unpacked how temperature, top-p, and top-k shape the quality and creativity of AI-generated text. Understanding and using them effectively can noticeably improve your applications, whether you're building tailored chatbots or creative writing tools. Now take this knowledge and experiment with your own setups to see what these models can do. Share this article with your peers, drop your thoughts in the comments, and explore the creative possibilities that await!

Enjoyed this article?

Subscribe to our newsletter and never miss out on new articles and updates.

More from Dev Orbit

GitHub Copilot vs Tabnine (2025): Which AI Assistant is Best?

AI coding assistants are no longer futuristic experiments—they’re becoming essential tools in the modern developer’s workflow. In this review, we’ll compare GitHub Copilot and Tabnine head-to-head in 2025, exploring how each performs in real-world backend coding tasks. From productivity gains to code quality, we’ll answer the burning question: Which AI assistant should you trust with your code?

MongoDB Insights in 2025: Unlock Powerful Data Analysis and Secure Your Database from Injection Attacks

MongoDB powers modern backend applications with flexibility and scalability, but growing data complexity demands better monitoring and security. MongoDB Insights tools provide critical visibility into query performance and help safeguard against injection attacks. This guide explores how to leverage these features for optimized, secure Python backends in 2025.

NestJS Knex Example: Step-by-Step Guide to Building Scalable SQL Application

Are you trying to use Knex.js with NestJS but feeling lost? You're not alone. While NestJS is packed with modern features, integrating it with SQL query builders like Knex requires a bit of setup. This beginner-friendly guide walks you through how to connect Knex with NestJS from scratch, covering configuration, migrations, query examples, real-world use cases and best practices. Whether you're using PostgreSQL, MySQL or SQLite, this comprehensive tutorial will help you build powerful and scalable SQL-based applications using Knex and NestJS.

Best Cloud Hosting for Python Developers in 2025 (AWS vs GCP vs DigitalOcean)

Finding the Right Python Cloud Hosting in 2025 — Without the Headaches Choosing cloud hosting as a Python developer in 2025 is no longer just about uptime or bandwidth. It’s about developer experience, cost efficiency and scaling with minimal friction. In this guide, we’ll break down the top options — AWS, GCP and DigitalOcean — and help you make an informed choice for your projects.

Raed Abedalaziz Ramadan: Steering Saudi Investment Toward the Future with AI and Digital Currencies

In an era marked by rapid technological advancements, the intersection of artificial intelligence and digital currencies is reshaping global investment landscapes. Industry leaders like Raed Abedalaziz Ramadan are pioneering efforts to integrate these innovations within Saudi Arabia’s economic framework. This article delves into how AI and digital currencies are being leveraged to position Saudi investments for future success, providing insights, strategies and practical implications for stakeholders.

Mistral AI Enhances Le Chat with Voice Recognition and Powerful Deep Research Capabilities

In an era where communication and information retrieval are pivotal to our digital interactions, Mistral AI has raised the bar with its latest upgrades to Le Chat. By integrating sophisticated voice recognition and advanced deep research capabilities, users will experience unparalleled ease of use, as well as the ability to access in-depth information effortlessly. This article delves into how these innovations can transform user experiences and the broader implications for developers and AI engineers.

Releted Blogs

The Labels First Sued AI. Now They Want to Own It.

In the rapidly evolving landscape of artificial intelligence, a fascinating shift is underway. Music labels, once adversaries of AI applications in the music industry, are now vying for ownership and control over the very technologies they once fought against. This article delves into the complexity of this pivot, examining the implications of labels seeking to own AI and how this transition could redefine the music landscape. If you’re keen on understanding the future of music technology and the battle for ownership in an AI-driven age, read on.

Containerized AI: What Every Node Operator Needs to Know

In the rapidly evolving landscape of artificial intelligence, containerization has emerged as a crucial methodology for deploying AI models efficiently. For node operators, understanding the interplay between containers and AI systems can unlock substantial benefits in scalability and resource management. In this guide, we'll delve into what every node operator needs to be aware of when integrating containerized AI into their operations, from foundational concepts to practical considerations.

Data Validation in Machine Learning Pipelines: Catching Bad Data Before It Breaks Your Model

In the rapidly evolving landscape of machine learning, ensuring data quality is paramount. Data validation acts as a safeguard, helping data scientists and engineers catch errors before they compromise model performance. This article delves into the importance of data validation, various techniques to implement it, and best practices for creating robust machine learning pipelines. We will explore real-world case studies, industry trends, and practical advice to enhance your understanding and implementation of data validation.

From Autocompletion to Agentic Reasoning: The Evolution of AI Code Assistants

Discover how AI code assistants have progressed from simple autocompletion tools to highly sophisticated systems capable of agentic reasoning. This article explores the innovations driving this transformation and what it means for developers and technical teams alike.

Are AIs Becoming the New Clickbait?

In a world where online attention is gold, the battle for clicks has transformed dramatically. As artificial intelligence continues to evolve, questions arise about its influence on content creation and management. Are AIs just the modern-day clickbait artists, crafting headlines that lure us in without delivering genuine value? In this article, we delve into the fascinating relationship between AI and clickbait, exploring how advanced technologies like GPT-5 shape engagement strategies, redefine digital marketing, and what it means for consumers and content creators alike.

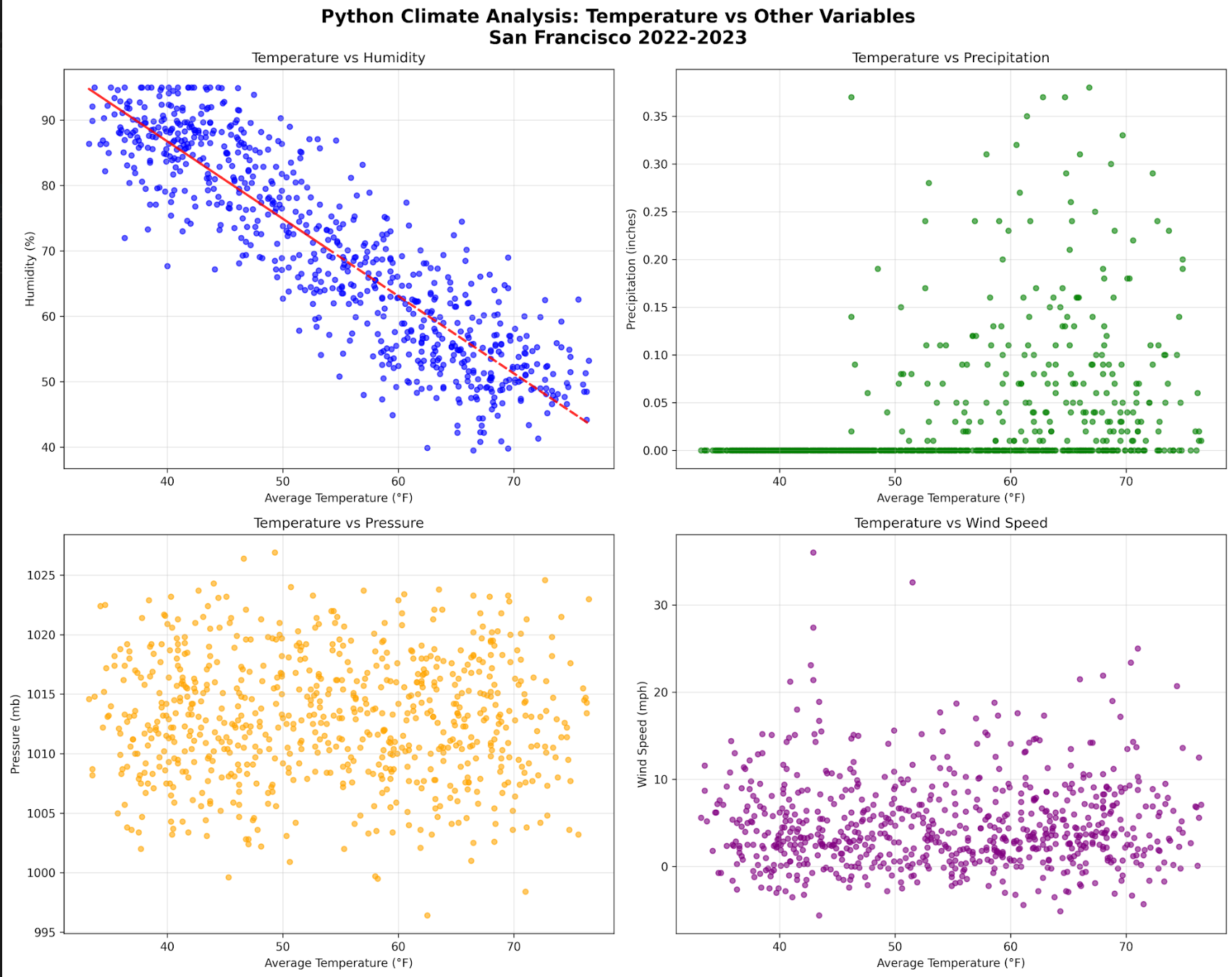

Python vs R vs SQL: Choosing Your Climate Data Stack

Delve into the intricacies of data analysis within climate science by exploring the comparative strengths of Python, R and SQL. This article will guide you through selecting the right tools for your climate data needs, ensuring efficient handling of complex datasets.

Have a story to tell?

Join our community of writers and share your insights with the world.

Start Writing